Newly unsealed court filings allege that Meta knew for years about significant risks facing young users on its platforms, including widespread contact between minors and adults, exacerbation of mental-health issues, and the easy spread of self-harm and eating-disorder content. Plaintiffs in a sweeping national lawsuit say the company did not disclose those dangers to families or Congress, even as teen usage continued to fuel Meta’s growth.

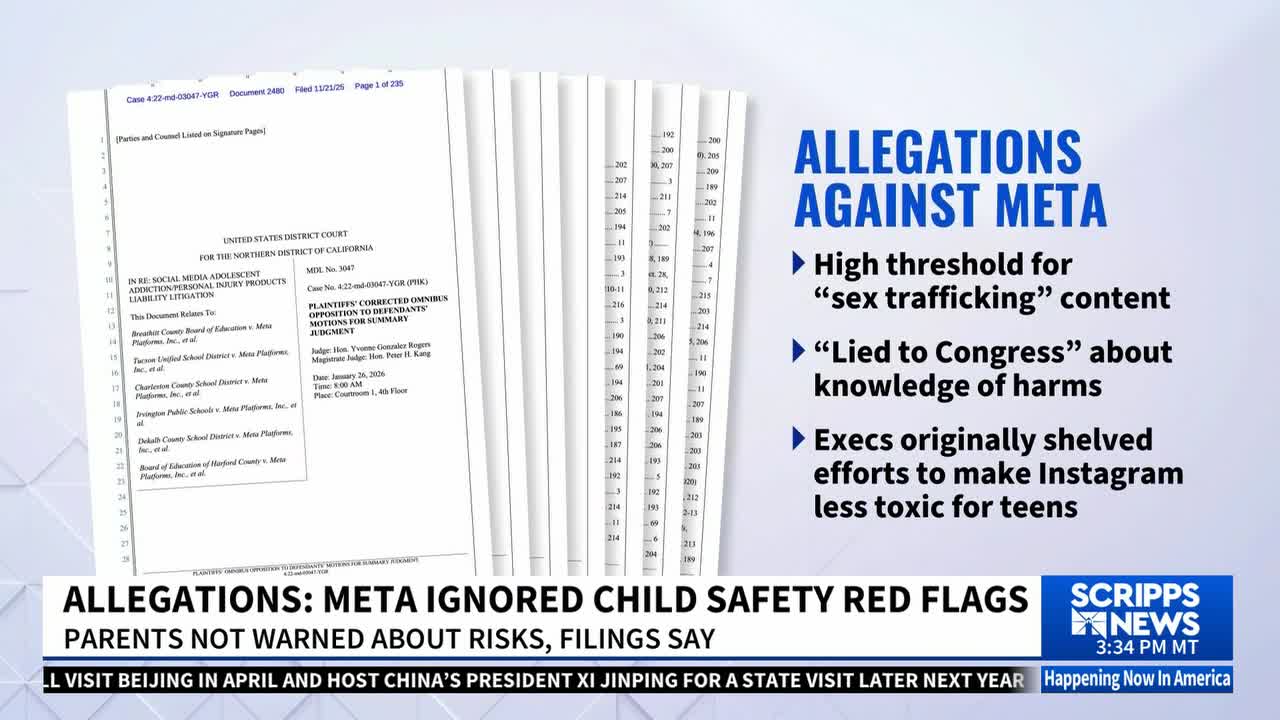

First reported by Time magazine, the allegations also describe a high threshold for sex-trafficking violations. Court documents cite testimony from Vaishnavi Jayakumar, Instagram's former head of safety and well-being, who said that "you could incur 16 violations for prostitution and sexual solicitation, and upon the 17th violation, your account would be suspended. By any measure across the industry, [it was] a very, very high strike threshold."

The allegations come from a partially unsealed opposition brief filed in the Northern District of California. It is part of a case brought by thousands of families, school districts and state attorneys general against Meta, Google, TikTok and Snapchat.

The master complaint alleges the companies “relentlessly pursued a strategy of growth at all costs, recklessly ignoring the impact of their products on children’s mental and physical health.” The brief specifically alleges Meta failed to disclose these harms to the public or to Congress, and refused to implement safety fixes that could have protected young users.

The plaintiffs' brief includes testimony from a variety of Meta staff describing an "ongoing trend of not prioritizing safety" and saying that the company "maintained their focus on growth" even though "[t]hey knew the negative externalities of their products were being pushed onto teens."

Brian Boland, Meta's former vice president of partnerships, who resigned in 2020, is quoted in the brief as saying that user safety is "not something that they spend a lot of time on. It's not something they think about. And I really think they don't care."

In addition to documenting safety concerns, the filing alleges that Meta executives failed to warn Congress about these risks and misrepresented the company's internal findings. Plaintiffs say Meta continued to design features that were addictive to young users, prioritizing growth, engagement and advertising revenue over safety interventions.

A Meta spokesperson told Scripps News the allegations “rely on cherry-picked quotes and misinformed opinions in an attempt to present a deliberately misleading picture.” The company said the full record will show that Meta has “for over a decade … listened to parents, researched issues that matter most, and made real changes to protect teens,” including introducing Teen Accounts with built-in protections and expanding parental controls.


While the brief focuses heavily on Meta, the litigation makes similar allegations against Google (YouTube's parent company), TikTok and Snapchat, though parts of the brief remain redacted.

The brief alleges Snapchat intentionally did not warn parents about known dangers on the platform, instead expanding "proactive reputation management" to focus on "'PR and communications for parental perception' over mental health research and interventions."

It also claims that while YouTube publicly supported "age-appropriate experiences for young people," "behind closed doors, it undermined those features by ramping up efforts to increase youth consumptions."

"Meta, Google, TikTok, and Snap designed social media products they knew were addictive to kids, and these internal company documents and testimony show they actively pushed these platforms into schools while bypassing parents and teachers," Lexi Hazam, the lead plaintiffs’ counsel in the litigation, wrote to Scripps News in a statement. "They knew the serious mental health risks to kids but provided no warnings, leaving schools and families to suffer the consequences. This is just the beginning—as the case moves toward trial, we will continue working to uncover the full extent of these companies’ misconduct and ensure that they are held responsible for the impact their platforms have had on children and teens, and the school systems that serve them."

In a statement to Scripps News, a Snapchat spokesperson wrote in part that the allegations “fundamentally misrepresent our platform. Snapchat was designed differently from traditional social media — it opens to the camera, not a feed, and has no public likes or social comparison metrics. The safety and well-being of our community is a top priority. Our goal has always been to encourage self-expression and authentic connections.”

The company recently released an update to its Family Safety Hub and introduced an interactive course to teach teens and families how to protect themselves from online risks.

Google and TikTok have not yet responded to Scripps News’ request for comment, but Google told Politico the lawsuits “fundamentally misunderstand” how YouTube works and called the allegations “simply not true,” pointing to safety tools built in consultation with experts.